The standard C library used with GCC is glibc, it provides POSIX standard functions for timing, sleeping, etc. On Unix platforms such as Solaris on Sparc, HP/UX on PA-RISC these can provide very high resolution timing, nanosecond to microsecond. On Linux 2.6 the resolution is typically 4ms, earlier versions used to be 1ms but certain machine configurations would fail as the timing routine would take longer than 1ms to execute.

Special Linux kernel versions are appearing that support real time or 1ms or finer resolution, for example SUSE Linux Enterprise Real Time (SLERT) or Ubuntu Studio. Using the latter allows microsecond timing with the gettimeofday() function and usleep() to 1ms resolution. In order to get finer grain sleeps we have to create our own routines, a basic loop checking the current time until the microsecond period has expired will do. One caveat that on single core systems the thread in the loop is likely to take all the CPU time, we need to yield the processor to other threads if the timer hasn't expired. In Linux we can use sched_yield(), to be platform we would want to use pthread_yield() however this does not exist with NPTL threads so we can use the Glib thread API version g_thread_yield() instead.

A custom high resolution sleep function doesn't immediately help with a Glib abstracted event loop with timer management either. We need to add a new source to the event loop that can fire events at the new microsecond resolution. To implement this we can derive from the existing timer source base, if the requested sleep time has a low resolution component, e.g. 1.5ms, we can use the existing timer to sleep for 1ms then take over with our high resolution timer for the remaining 500us. The new source is an idle source, that is executes when no other high priority events need to be processed. Effectively Glib is going to run a select()/poll() with a timeout and then execute all the idle sources and repeat. With a low resolution timer the select()/poll() manages the timeout, for high resolution timing it runs with a zero timeout.

In a standard PGM transport we might expect hundreds to thousands of timers awaiting to be fired, from sending session keep alive messages (SPMs) to re-requesting lost data (NAKs). We want to minimize the number of high resolution timers, and minimise overhead of changing timers due to incoming data or receiver state changes and we can do that by managing the entire transport timers internally and presenting one global timer to the underlying Glib event loop. The following diagram shows the two sides of the transport, one of three timers per packet on the receive side: NAK_RB_IVL for NAK request back-off, NAK_RPT_IVL to repeat send a NAK, and NAK_RDATA_IVL to wait for a RDATA if seeing a NAK confirm (NCF); the send side includes an ambient SPM keeping the session alive, and heartbeat SPMs to help flush out trailing packets that might have been lost.

Once Linux implements high resolution timers for select()/poll() this method is no longer required and we should expect improved CPU usage on the timer thread.

Sunday, 24 June 2007

Friday, 11 May 2007

Ceci n’est pas une pipe

Signals on many platforms are completely not-thread safe, this being due to the delivery by an interrupt which can halt execution mid-way through a function call. Checking the man page signal(2) POSIX.1-2003 lists safe functions to call in a signal handler:

_Exit() _exit() abort() accept() access() aio_error() aio_return() aio_suspend() alarm() bind() cfgetispeed() cfgetospeed() cfsetispeed() cfsetospeed() chdir() chmod() chown() clock_gettime() close() connect() creat() dup() dup2() execle() execve() fchmod() fchown() fcntl() fdatasync() fork() fpathconf() fstat() fsync() ftruncate() getegid() geteuid() getgid() getgroups() getpeername() getpgrp() getpid() getppid() getsockname() getsockopt() getuid() kill() link() listen() lseek() lstat() mkdir() mkfifo() open() pathconf() pause() pipe() poll() posix_trace_event() pselect() raise() read() readlink() recv() recvfrom() recvmsg() rename() rmdir() select() sem_post() send() sendmsg() sendto() setgid() setpgid() setsid() setsockopt() setuid() shutdown() sigaction() sigaddset() sigdelset() sigemptyset() sigfillset() sigismember() signal() sigpause() sigpending() sigprocmask() sigqueue() sigset() sigsuspend() sleep() socket() socketpair() stat() symlink() sysconf() tcdrain() tcflow() tcflush() tcgetattr() tcgetpgrp() tcsendbreak() tcsetattr() tcsetpgrp() time() timer_getoverrun() timer_gettime() timer_settime() times() umask() uname() unlink() utime() wait() waitpid() write()

The popular method to handle signals is then through a pipe to an event loop, read "Catching Unix signals" for a Gtk example.

Using pipes is also a popular mechanism for multiple threads to communicate with each other, with the PGM transport the application needs to be notified only when contiguous data is available, handling of out of order sequence numbers and NAK requests should be transparent. However it only need be used as a thread-safe signalling mechanism, so for zero-copy we simply use a shared memory structure for the actual data to pass, in this case via a Glib asynchronous queue. A pipe can be used in a select() or poll() call, the thread can then sleep until data is available, otherwise a constant loop checking shared memory would be necessary with the side effect of starving other threads of processor time.

_Exit() _exit() abort() accept() access() aio_error() aio_return() aio_suspend() alarm() bind() cfgetispeed() cfgetospeed() cfsetispeed() cfsetospeed() chdir() chmod() chown() clock_gettime() close() connect() creat() dup() dup2() execle() execve() fchmod() fchown() fcntl() fdatasync() fork() fpathconf() fstat() fsync() ftruncate() getegid() geteuid() getgid() getgroups() getpeername() getpgrp() getpid() getppid() getsockname() getsockopt() getuid() kill() link() listen() lseek() lstat() mkdir() mkfifo() open() pathconf() pause() pipe() poll() posix_trace_event() pselect() raise() read() readlink() recv() recvfrom() recvmsg() rename() rmdir() select() sem_post() send() sendmsg() sendto() setgid() setpgid() setsid() setsockopt() setuid() shutdown() sigaction() sigaddset() sigdelset() sigemptyset() sigfillset() sigismember() signal() sigpause() sigpending() sigprocmask() sigqueue() sigset() sigsuspend() sleep() socket() socketpair() stat() symlink() sysconf() tcdrain() tcflow() tcflush() tcgetattr() tcgetpgrp() tcsendbreak() tcsetattr() tcsetpgrp() time() timer_getoverrun() timer_gettime() timer_settime() times() umask() uname() unlink() utime() wait() waitpid() write()

The popular method to handle signals is then through a pipe to an event loop, read "Catching Unix signals" for a Gtk example.

Using pipes is also a popular mechanism for multiple threads to communicate with each other, with the PGM transport the application needs to be notified only when contiguous data is available, handling of out of order sequence numbers and NAK requests should be transparent. However it only need be used as a thread-safe signalling mechanism, so for zero-copy we simply use a shared memory structure for the actual data to pass, in this case via a Glib asynchronous queue. A pipe can be used in a select() or poll() call, the thread can then sleep until data is available, otherwise a constant loop checking shared memory would be necessary with the side effect of starving other threads of processor time.

Like a jigsaw puzzle

Having the pieces first obviously helps, and we have already created separate receive and transmit windows together with the necessary network socket details. We want to define a new object that incorporates both receiver and transmit side functionality and manages all the network specific details for us. Independently we can investigate what kind of API we want to see by creating new basic send and receiver tools: pgmsend and pgmrecv derived from previously created basic_recv_with_rxw and stream_send_with_nak. The following diagram shows all the components that are affected:

That's getting a bit complicated to view from a functional level so lets have a look at the combined data flow diagram:

The TX/RX queue refers to of the operating system, the asynchronous event queue and event loop is determinable upon the integration framework. Currently integration is with the Glib event loop however the event hooks can easily be redirected to a Windows native, Qt, or any other. It is also not necessary for the PGM event loop to be a separate from the application event loop, although only recommended for low data rate applications.

That's getting a bit complicated to view from a functional level so lets have a look at the combined data flow diagram:

The TX/RX queue refers to of the operating system, the asynchronous event queue and event loop is determinable upon the integration framework. Currently integration is with the Glib event loop however the event hooks can easily be redirected to a Windows native, Qt, or any other. It is also not necessary for the PGM event loop to be a separate from the application event loop, although only recommended for low data rate applications.

Monday, 23 April 2007

Cost of Time

Network protocols have a heavy dependency on time: when should a packet be resent? Will I ever receive this packet? Is the other party still running? The PGM protocol defines many timers in the receiver for determining packet state: NAK_RB_IVL, NAK_RPT_IVL and NAK_RDATA_IVL. There are also many different methods of calculating time, from POSIX gettimeofday() & clock_gettime(), Windows QueryPerformanceCounter() & _ftime() to Intel's RDTSC & RDTSCP instructions. The Glib suite defines a GTimer to provide some abstraction but uses doubles and hence potential expensive floating-point math.

So one question is what kind of overhead can one expect with Glib timers? Here is a graph with timers:

Now removing the timers completely and re-running gives the following results:

The test series "sequence numbers in jumps" causes generation of many NAKs each requiring its own times tamp for expiry detection, 68% of the processing is simply getting the current time!

So one question is what kind of overhead can one expect with Glib timers? Here is a graph with timers:

Now removing the timers completely and re-running gives the following results:

Thursday, 19 April 2007

Gimme that packet

So we're sending data with a transmit window to handle reliability how about a receive window to process, re-order, and request re-delivery of lost packets for reliable transfer? If we take a similar architecture to the transmit window we have something like this:

A fixed pointer array defines the maximum size of the receive window, at run time a container is assigned to function as a place holder for lost packets, or container for received data. Memory is pooled through a slab allocator and managed with a trash stack for optimum performance. The trail refers to the trailing edge of the non-contiguous data rather than RXW_TRAIL.

When a packet is received it is inserted into the receive window, if non-contiguous a series of place holders are generated which are used to manage the sequence number receive state as per the flow chart in the draft specification:

Flow chart of receive state as per draft RFC 3208.

In order to allow rapid timer expiration a series of queues are maintained for each receive state, the queues are made available for external access in order to protocol tweaking for either low latency (MDS), large object transfer (files), broadcast (video streaming) purposes.

After implementation of rxw.c we can perform basic performance tests (basic_rxw.c) to compare with the transmit window implementation. In order for a fair comparison of overheads we define three tests: one a basic fill of the receive window without committing data, two to fill in the window in reverse order, and a third to skip every other sequence number to alternate between inserting data and a place holder.

This graph shows that for basic fills performance exceeds the transmit window and worst case scenarios significantly lag behind but not overly unreasonably and little difference between 100k and 200k packets.

The magnitude of difference between send and receive side underscores some important design decisions that need to be made for implementation. In many typical environments the server host would be a high speed AMD64 Linux box whilst the clients are mid-speed Intel Windows boxes amplifying any disadvantage of receive side processing. So can we improve the receive side performance, for example by removing the place holder per sequence number and grouping together ranges? The results of a profile run:

Flat profile:

Each sample counts as 0.01 seconds.

% cumulative self self total

time seconds seconds calls ms/call ms/call name

37.10 0.27 0.27 7200000 0.00 0.00 rxw_alloc

24.05 0.45 0.18 7200000 0.00 0.00 rxw_push

13.74 0.55 0.10 7200000 0.00 0.00 rxw_state_foreach

9.62 0.62 0.07 5400012 0.00 0.00 rxw_pkt_free1

6.87 0.67 0.05 8999988 0.00 0.00 rxw_alloc0_packet

5.50 0.71 0.04 5399940 0.00 0.00 rxw_pkt_state_unlink

1.37 0.72 0.01 12 0.83 15.75 test_basic_rxw

0.69 0.72 0.01 5400012 0.00 0.00 on_pgm_data

0.69 0.73 0.01 3599964 0.00 0.00 on_send_nak

0.00 0.73 0.00 48 0.00 0.00 rxw_window_update

0.00 0.73 0.00 12 0.00 14.91 test_fill

0.00 0.73 0.00 12 0.00 14.91 test_jump

0.00 0.73 0.00 12 0.00 14.91 test_reverse

These results show more time handling packets (61%) than place holders (21%) with 14% NAK list overhead, similarly with oprofile:

Flat profile:

Each sample counts as 1 samples.

% cumulative self self total

time samples samples calls T1/call T1/call name

24.40 72479.00 72479.00 rxw_push

17.14 123399.00 50920.00 rxw_alloc

14.47 166397.00 42998.00 rxw_state_foreach

13.18 205554.00 39157.00 rxw_pkt_state_unlink

10.98 238170.00 32616.00 rxw_pkt_free1

6.50 257488.00 19318.00 rxw_alloc0_packet

6.45 276645.00 19157.00 rxw_ncf

2.27 283389.00 6744.00 on_pgm_data

1.32 287314.00 3925.00 _init

0.86 289872.00 2558.00 test_basic_rxw

0.77 292148.00 2276.00 test_reverse

0.76 294413.00 2265.00 test_jump

0.59 296154.00 1741.00 test_fill

0.24 296877.00 723.00 on_send_nak

0.07 297081.00 204.00 on_wait_ncf

0.00 297084.00 3.00 main

0.00 297085.00 1.00 __libc_csu_init

0.00 297086.00 1.00 rxw_window_update

41% time handling packets, 29% handling place holders with 15% NAK list overhead.

A fixed pointer array defines the maximum size of the receive window, at run time a container is assigned to function as a place holder for lost packets, or container for received data. Memory is pooled through a slab allocator and managed with a trash stack for optimum performance. The trail refers to the trailing edge of the non-contiguous data rather than RXW_TRAIL.

When a packet is received it is inserted into the receive window, if non-contiguous a series of place holders are generated which are used to manage the sequence number receive state as per the flow chart in the draft specification:

Flow chart of receive state as per draft RFC 3208.

In order to allow rapid timer expiration a series of queues are maintained for each receive state, the queues are made available for external access in order to protocol tweaking for either low latency (MDS), large object transfer (files), broadcast (video streaming) purposes.

After implementation of rxw.c we can perform basic performance tests (basic_rxw.c) to compare with the transmit window implementation. In order for a fair comparison of overheads we define three tests: one a basic fill of the receive window without committing data, two to fill in the window in reverse order, and a third to skip every other sequence number to alternate between inserting data and a place holder.

This graph shows that for basic fills performance exceeds the transmit window and worst case scenarios significantly lag behind but not overly unreasonably and little difference between 100k and 200k packets.

The magnitude of difference between send and receive side underscores some important design decisions that need to be made for implementation. In many typical environments the server host would be a high speed AMD64 Linux box whilst the clients are mid-speed Intel Windows boxes amplifying any disadvantage of receive side processing. So can we improve the receive side performance, for example by removing the place holder per sequence number and grouping together ranges? The results of a profile run:

Flat profile:

Each sample counts as 0.01 seconds.

% cumulative self self total

time seconds seconds calls ms/call ms/call name

37.10 0.27 0.27 7200000 0.00 0.00 rxw_alloc

24.05 0.45 0.18 7200000 0.00 0.00 rxw_push

13.74 0.55 0.10 7200000 0.00 0.00 rxw_state_foreach

9.62 0.62 0.07 5400012 0.00 0.00 rxw_pkt_free1

6.87 0.67 0.05 8999988 0.00 0.00 rxw_alloc0_packet

5.50 0.71 0.04 5399940 0.00 0.00 rxw_pkt_state_unlink

1.37 0.72 0.01 12 0.83 15.75 test_basic_rxw

0.69 0.72 0.01 5400012 0.00 0.00 on_pgm_data

0.69 0.73 0.01 3599964 0.00 0.00 on_send_nak

0.00 0.73 0.00 48 0.00 0.00 rxw_window_update

0.00 0.73 0.00 12 0.00 14.91 test_fill

0.00 0.73 0.00 12 0.00 14.91 test_jump

0.00 0.73 0.00 12 0.00 14.91 test_reverse

These results show more time handling packets (61%) than place holders (21%) with 14% NAK list overhead, similarly with oprofile:

Flat profile:

Each sample counts as 1 samples.

% cumulative self self total

time samples samples calls T1/call T1/call name

24.40 72479.00 72479.00 rxw_push

17.14 123399.00 50920.00 rxw_alloc

14.47 166397.00 42998.00 rxw_state_foreach

13.18 205554.00 39157.00 rxw_pkt_state_unlink

10.98 238170.00 32616.00 rxw_pkt_free1

6.50 257488.00 19318.00 rxw_alloc0_packet

6.45 276645.00 19157.00 rxw_ncf

2.27 283389.00 6744.00 on_pgm_data

1.32 287314.00 3925.00 _init

0.86 289872.00 2558.00 test_basic_rxw

0.77 292148.00 2276.00 test_reverse

0.76 294413.00 2265.00 test_jump

0.59 296154.00 1741.00 test_fill

0.24 296877.00 723.00 on_send_nak

0.07 297081.00 204.00 on_wait_ncf

0.00 297084.00 3.00 main

0.00 297085.00 1.00 __libc_csu_init

0.00 297086.00 1.00 rxw_window_update

41% time handling packets, 29% handling place holders with 15% NAK list overhead.

Wednesday, 11 April 2007

1 + 2 = 3

In order to provide reliability the PGM protocol needs to be able to detect when packets have been corrupted, a double checksum is used, one by the operating system on the IP header and one in the PGM header for the entire PGM packet similarly to how UDP and TCP packets are described.

The IP header is often updated requiring the checksum to be recalculated by network elements, for example updating the multicast TTL in each router. For the payload modern network cards provide hardware checksum offload for UDP and TCP packets, however with PGM the checksum has to run in userspace so some tests are required to find an optimal routine. Aside from the actual calculation, which is a one's complement, a PGM API has to copy the payload from the application layer in order to add the PGM header (without I/O scatter gather) and store in the transmit window, we could calculate the checksum then memcpy() the packet or try to implement a joint checksum and copy routine.

First on a 3.2Ghz Intel Xeon.

The red line is a C based checksum and copy routine and leads a separate memcpy() and checksum to around 6KB packet size, an 64bit assembly routine from the Linux kernel performs worse above 1KB.

Now compare with a dual-core AMD Opteron based machine:

The separate checksum and memcpy() routines lead at 2KB, whilst the Linux assembly routines easily excel.

A quad-core Intel Xeon machine:

The assembly routine does significantly better than the original Xeon host, we need to convert tick time into real time to compare each graph though:

The dual-core AMD Opteron is the clear winner for this computation.

The IP header is often updated requiring the checksum to be recalculated by network elements, for example updating the multicast TTL in each router. For the payload modern network cards provide hardware checksum offload for UDP and TCP packets, however with PGM the checksum has to run in userspace so some tests are required to find an optimal routine. Aside from the actual calculation, which is a one's complement, a PGM API has to copy the payload from the application layer in order to add the PGM header (without I/O scatter gather) and store in the transmit window, we could calculate the checksum then memcpy() the packet or try to implement a joint checksum and copy routine.

First on a 3.2Ghz Intel Xeon.

The red line is a C based checksum and copy routine and leads a separate memcpy() and checksum to around 6KB packet size, an 64bit assembly routine from the Linux kernel performs worse above 1KB.

Now compare with a dual-core AMD Opteron based machine:

The separate checksum and memcpy() routines lead at 2KB, whilst the Linux assembly routines easily excel.

A quad-core Intel Xeon machine:

The assembly routine does significantly better than the original Xeon host, we need to convert tick time into real time to compare each graph though:

| 3.2GhzIntel Xeon | 1.6GhzQuad-core Xeon | 2.4GhzDual-core Opteron | |

| memcpy | 2.66 ms | 3.75 ms | 2.46 ms |

| cksum | 2.66 ms | 2.81 ms | 2.54 ms |

| linux | 3.60 ms | 2.12 ms | 0.63 ms |

The dual-core AMD Opteron is the clear winner for this computation.

I’ve Got my Bag Lets Go!

So the results tell that a combination of containers is going to be useful, we can use a pre-allocated pointer array to store the details about each entry in the transmit window to gain the best access speed, and a trash stack based pointer system for the actual payload.

Its probable performance might be boosted further by using chunks of page size aligned data and sharing between several entries in the window. In so doing the overhead of generating or checking time stamps when inserting or purging from the window can be reduced. In this current stage of development we are I/O bound not CPU bound and so we shall revisit later when there is a greater surrounding framework, and burden on CPU that can highlight the difference.

The trash stack keeps freed packets and payloads allocated to the process for future use, a first in last out policy makes it cache friendly too. One important side effect is that memory stays in the transmit window system once allocated and will be unavailable to the application, but that is part of the rational of choosing the maximum transmit window size, either in bytes, sequence numbers, or time duration. Returning memory to the slice allocator would still keep the memory allocated to the process for application use but previous tests have shown at a latency cost. Using the system malloc instead of the slice allocator would be even slower but on Linux allow the memory to return to the operating system, however not all systems are the same, for example Solaris malloc never frees memory from the application.

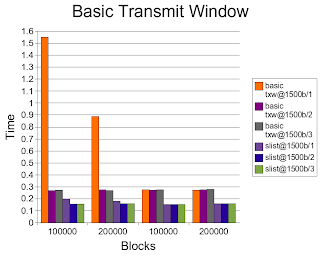

Here you can see the implementation txw.c is slower than a basic singly linked list, this appears the overhead of using a pointer buffer instead of byte buffer for the packet details. To test this we compare the pointer buffer implementation with a byte buffer (txw-byte.c), and a byte buffer with pointer index (txw-bytep.c) in case the multiply is slow.

The results show that in fact the pointer array implementation is faster than a byte array.

Its probable performance might be boosted further by using chunks of page size aligned data and sharing between several entries in the window. In so doing the overhead of generating or checking time stamps when inserting or purging from the window can be reduced. In this current stage of development we are I/O bound not CPU bound and so we shall revisit later when there is a greater surrounding framework, and burden on CPU that can highlight the difference.

The trash stack keeps freed packets and payloads allocated to the process for future use, a first in last out policy makes it cache friendly too. One important side effect is that memory stays in the transmit window system once allocated and will be unavailable to the application, but that is part of the rational of choosing the maximum transmit window size, either in bytes, sequence numbers, or time duration. Returning memory to the slice allocator would still keep the memory allocated to the process for application use but previous tests have shown at a latency cost. Using the system malloc instead of the slice allocator would be even slower but on Linux allow the memory to return to the operating system, however not all systems are the same, for example Solaris malloc never frees memory from the application.

Here you can see the implementation txw.c is slower than a basic singly linked list, this appears the overhead of using a pointer buffer instead of byte buffer for the packet details. To test this we compare the pointer buffer implementation with a byte buffer (txw-byte.c), and a byte buffer with pointer index (txw-bytep.c) in case the multiply is slow.

The results show that in fact the pointer array implementation is faster than a byte array.

Thursday, 5 April 2007

How Big is that Bag?

Standard ethernet packets usually 1,500 bytes long, on a typical home network this might vary because of ATM based internet connections, for high bandwidth environments this might increase with jumbo frames to 9,000 bytes and beyond with IPv6 jumbograms. So how does the size of a packet affect container performance in the transmit window?

The graph says it all, the different is minor.

Whats in my Bag?

To test the performance of random access of the window it is simple enough to simply iterate over the entire window and calculate the average time period.

These graphs put the earlier allocator tests into perspective, the linked lists, queue all perform abysmally for random access. The hash table just makes it into the graph and the arrays clearly excel.

These tests are all in basic_container2.

These graphs put the earlier allocator tests into perspective, the linked lists, queue all perform abysmally for random access. The hash table just makes it into the graph and the arrays clearly excel.

These tests are all in basic_container2.

Wednesday, 4 April 2007

Grab me a Bag

A transmit window stores a sequential series of packets of which any can be requested to be re-transmitted. So taking the engineering approach to answer the question: what is the best container? We test each container available in the glib toolkit for two performance properties: first sequentially adding items to the window, and second randomly accessing items in the window. From this we can draw some pretty graphs

A time penalty occurs for the first block allocations, this is when page faults occur and pages are assigned to the applications process space. Post allocation the time period for allocating remains constant.

Now we have three different allocators for the data in the container, first regular malloc, second a glib slice allocator, and third a pre-populated trash stack. Note that we are running all the tests in one application so only the first run suffers from the page fault penalty. The trash stack usage shows a clear performance advantage, whilst minimal difference between malloc and the slice allocator.

The graph gets rather busy, the y-axis deliberately culled to 0.5μs as the byte array times are 1000% worse. What we can tell is that singly linked lists are fractionally faster the doubly linked, but similar to queues and pointer arrays. Hash tables and byte arrays easily fair the worst.

These tests are all in basic_container.

A time penalty occurs for the first block allocations, this is when page faults occur and pages are assigned to the applications process space. Post allocation the time period for allocating remains constant.

Now we have three different allocators for the data in the container, first regular malloc, second a glib slice allocator, and third a pre-populated trash stack. Note that we are running all the tests in one application so only the first run suffers from the page fault penalty. The trash stack usage shows a clear performance advantage, whilst minimal difference between malloc and the slice allocator.

The graph gets rather busy, the y-axis deliberately culled to 0.5μs as the byte array times are 1000% worse. What we can tell is that singly linked lists are fractionally faster the doubly linked, but similar to queues and pointer arrays. Hash tables and byte arrays easily fair the worst.

These tests are all in basic_container.

So Super

Implementing a new network protocol that sits side by side with UDP/TCP rather than on top requires super-user privileges in order to create a raw socket (SOCK_RAW). Similarly for many network servers such as a web server super-user or root privileges are needed to open a port below 1024. A process with super-user rights has full access to the system but for a internet server this is not a good idea as a simple software defect could allow a hacker to gain full control of the host, or in rare circumstances the program could destroy the system installation itself. Therefore it is common for a server process to drop its super-user privileges after using them, but drop to whom?

The popular name is nobody, but what is the user identifier (UID) and group ID (GID) of nobody? -2 is popular, but both in 16 bit (65534) and 32 bit (4294967294) representation for example Linux and Mac OS X respectively. Some older platforms arbitrary values like 60001 for Irix, and even earlier implementations of Linux used 99.

Is it a bird? Is it a plane? No, its Superman Kubrick and a Bearbrick.

The popular name is nobody, but what is the user identifier (UID) and group ID (GID) of nobody? -2 is popular, but both in 16 bit (65534) and 32 bit (4294967294) representation for example Linux and Mac OS X respectively. Some older platforms arbitrary values like 60001 for Irix, and even earlier implementations of Linux used 99.

Is it a bird? Is it a plane? No, its Superman Kubrick and a Bearbrick.

And the Memory Leak Winner is …

Valgrind is a memory debugger, one of the big problems with large programs that run for any significant period of time is memory usage, a memory debugger allows a developer to diagnose where memory is being used, or lost due to programming error. Unfortunately many false negatives appear, or just fruity library calls that like leaving a mess exist, for example getprotobyname() generates this output:

==3068== Address 0x51250B8 is 16 bytes inside a block of size 23 alloc'd

==3068== at 0x4A2080E: malloc (vg_replace_malloc.c:149)

==3068== by 0x400865F: (within /lib/ld-2.4.so)

==3068== by 0x401119C: (within /lib/ld-2.4.so)

==3068== by 0x400D1E5: (within /lib/ld-2.4.so)

==3068== by 0x4010C0A: (within /lib/ld-2.4.so)

==3068== by 0x4DC313F: (within /lib/libc-2.4.so)

==3068== by 0x400D1E5: (within /lib/ld-2.4.so)

==3068== by 0x4DC32A6: __libc_dlopen_mode (in /lib/libc-2.4.so)

==3068== by 0x4D9F396: __nss_lookup_function (in /lib/libc-2.4.so)

==3068== by 0x4D9F462: (within /lib/libc-2.4.so)

==3068== by 0x4DA733B: getprotobyname_r (in /lib/libc-2.4.so)

==3068== by 0x4DA70EF: getprotobyname (in /lib/libc-2.4.so)

Which gets repeated with slightly different numbers for all of one call :eek:

==3068== Address 0x51250B8 is 16 bytes inside a block of size 23 alloc'd

==3068== at 0x4A2080E: malloc (vg_replace_malloc.c:149)

==3068== by 0x400865F: (within /lib/ld-2.4.so)

==3068== by 0x401119C: (within /lib/ld-2.4.so)

==3068== by 0x400D1E5: (within /lib/ld-2.4.so)

==3068== by 0x4010C0A: (within /lib/ld-2.4.so)

==3068== by 0x4DC313F: (within /lib/libc-2.4.so)

==3068== by 0x400D1E5: (within /lib/ld-2.4.so)

==3068== by 0x4DC32A6: __libc_dlopen_mode (in /lib/libc-2.4.so)

==3068== by 0x4D9F396: __nss_lookup_function (in /lib/libc-2.4.so)

==3068== by 0x4D9F462: (within /lib/libc-2.4.so)

==3068== by 0x4DA733B: getprotobyname_r (in /lib/libc-2.4.so)

==3068== by 0x4DA70EF: getprotobyname (in /lib/libc-2.4.so)

Which gets repeated with slightly different numbers for all of one call :eek:

Re-inventing the Wheel

Any significant application in the world that is supported on multiple platforms creates its own toolkit for performing basic functions like file and network I/O. This may be due to some functions not being available on different platforms: e.g. WSASocket on Windows, or simply a requirement for more advanced basic functions like file_get_contents() in PHP. Popular toolkits or frameworks include Glib (not to be confused with glibc), Qt (Trolltech), Mozilla, and Apache runtimes.

In choosing Glib we gain a well used thread-safe event manager and a native C API allowing purposing in C++ too, its also a petite library without tying to a graphics system like Gtk+ and can be built upon with GObjects and an embedded web server LibSoup to easily implement a web administrative interface.

A variety of toolkits or frameworks are available from different organizations under different licenses.

In choosing Glib we gain a well used thread-safe event manager and a native C API allowing purposing in C++ too, its also a petite library without tying to a graphics system like Gtk+ and can be built upon with GObjects and an embedded web server LibSoup to easily implement a web administrative interface.

A variety of toolkits or frameworks are available from different organizations under different licenses.

Create Tests then Implement

Test-driven development (TDD) can help to build software better and faster. Following this the next steps are to product a simple tool to view PGM packets on the network. Conveniently tcpdump already includes a level of PGM support and its packet parsing concept can be leveraged to speed up development. tcpdump can be also used to verify operation of the new tool pgmdump.

Now we need to generate some packets, easiest is to generate a simple original data (ODATA) packet and fill the payload with a basic string: basic_send. For a variation of packet SPMs can be generated on a timer, in effect implementing the ambient SPM function of the PGM protocol: spm_idle.

A PGM packet sent by basic_send can be received and decoded by pgmdump and tcpdump simultaneously.

Now we need to generate some packets, easiest is to generate a simple original data (ODATA) packet and fill the payload with a basic string: basic_send. For a variation of packet SPMs can be generated on a timer, in effect implementing the ambient SPM function of the PGM protocol: spm_idle.

A PGM packet sent by basic_send can be received and decoded by pgmdump and tcpdump simultaneously.

Tuesday, 3 April 2007

Rolling a Transport Protocol

How to write a network transport? Well, in this case the definition of the protocol and hence most of the hard work has already been done.

Pragmatic General Multicast (PGM) is a reliable multicast transport protocol, guaranteeing that recipients either receive data or detect that it is unrecoverable. TCP is an example of a reliable transport, however TCP is a point to point data stream between two parties. In order to send data to multiple parties, for example a voice or video stream with TCP you would have to initiate a separate connection with each party and explicitly send copies of the data to each recipient. If you had a 1mb/s data stream and a 10mb/s network you could only, in ideal conditions, send to 10 viewers. Using a different protocol called UDP we can have the same data broadcast by the network to all parties. Multicast is a slight variation in which, with supported network equipment, only parties who are interested in the data will receive it.

Unicast describes 1 to 1 delivery, broadcast for one to many, and multicast again for one to many.

UDP datagrams are unreliable and delivery is not guaranteed to be in the same order as sent. Therefore the sender has a transmit window to retain transmitted data for recovery, and each receiver has a receiving window to re-arrange and request lost messages.

After that and with an empty directory how do we move forward?

The first step is finding all the packet formats and detailing as structures in a new header file, imaginatively pgm.h. We discover all the packet types and define all the fields. Source Path Message (SPM) is used in a complex hierarchy to define the closest network element to request repairs from and to update the status of the transmit window. PGM uses negative acknowledgments (NAKs) to handle packet loss, so that in ideal conditions there is no network overhead for acknowledgments (ACKs) as with TCP.

Packet loss detected by sequence number gap by subsequent packets or SPMs.

Every data packet has a sequence number so for a constant stream of data a receiver can easily detect a gap in the sequence numbers and initiate recovery with the sender. What happens if the sender publishes one data message and then stops? SPMs are sent after data messages to indicate the last sequence number and allow receivers to recover.

Pragmatic General Multicast (PGM) is a reliable multicast transport protocol, guaranteeing that recipients either receive data or detect that it is unrecoverable. TCP is an example of a reliable transport, however TCP is a point to point data stream between two parties. In order to send data to multiple parties, for example a voice or video stream with TCP you would have to initiate a separate connection with each party and explicitly send copies of the data to each recipient. If you had a 1mb/s data stream and a 10mb/s network you could only, in ideal conditions, send to 10 viewers. Using a different protocol called UDP we can have the same data broadcast by the network to all parties. Multicast is a slight variation in which, with supported network equipment, only parties who are interested in the data will receive it.

Unicast describes 1 to 1 delivery, broadcast for one to many, and multicast again for one to many.

UDP datagrams are unreliable and delivery is not guaranteed to be in the same order as sent. Therefore the sender has a transmit window to retain transmitted data for recovery, and each receiver has a receiving window to re-arrange and request lost messages.

After that and with an empty directory how do we move forward?

The first step is finding all the packet formats and detailing as structures in a new header file, imaginatively pgm.h. We discover all the packet types and define all the fields. Source Path Message (SPM) is used in a complex hierarchy to define the closest network element to request repairs from and to update the status of the transmit window. PGM uses negative acknowledgments (NAKs) to handle packet loss, so that in ideal conditions there is no network overhead for acknowledgments (ACKs) as with TCP.

Packet loss detected by sequence number gap by subsequent packets or SPMs.

Every data packet has a sequence number so for a constant stream of data a receiver can easily detect a gap in the sequence numbers and initiate recovery with the sender. What happens if the sender publishes one data message and then stops? SPMs are sent after data messages to indicate the last sequence number and allow receivers to recover.

Subscribe to:

Comments (Atom)